Researchers have developed a sensor that can be implanted anywhere in the body, for example under the tip of a severed finger; the sensor connects to another nerve that still functions properly and restores the tactile sensation lost through the injured nerve.

Research

Tel Aviv University's groundbreaking new technology offers hope to people who have lost the sense of touch in a limb following amputation or injury. The technology involves a tiny sensor that is implanted in the nerve of the injured limb, for example in the finger, and is connected directly to a healthy nerve. Each time the limb touches an object, the sensor is activated and conducts an electric current to the functioning nerve, which recreates the feeling of touch. The researchers emphasize that this is a tested and safe technology that is suited to the human body and, once clinical trials have been completed, could be implanted anywhere inside it.

The technology was developed under the leadership of a team of experts from Tel Aviv University: Dr. Ben M. Maoz, Iftach Shlomy, Shay Divald, and Dr. Yael Leichtmann-Bardoogo from the Department of Biomedical Engineering, Fleischman Faculty of Engineering, in collaboration with Keshet Tadmor from the Sagol School of Neuroscience and Dr. Amir Arami from the Sackler School of Medicine and the Microsurgery Unit in the Department of Hand Surgery at Sheba Medical Center. The study was published in the prestigious journal ACS Nano.

The researchers say that this unique project began with a meeting between the two Tel Aviv University colleagues – biomedical engineer Dr. Maoz and surgeon Dr. Arami. “We were talking about the challenges we face in our work,” says Dr. Maoz, “and Dr. Arami shared with me the difficulty he experiences in treating people who have lost tactile sensation in one organ or another as a result of injury. It should be understood that this loss of sensation can result from a very wide range of injuries, from minor wounds – like someone chopping a salad and accidentally cutting himself with the knife – to very serious injuries. Even if the wound can be healed and the injured nerve can be sutured, in many cases the sense of touch remains damaged. We decided to tackle this challenge together, and find a solution that will restore tactile sensation to those who have lost it.”

In recent years, the field of neural prostheses has made promising advances in improving the lives of people who have lost sensation in their limbs by implanting sensors in place of damaged nerves. But existing technology has a number of significant drawbacks, such as complex manufacturing and use, as well as the need for an external power source, such as a battery. Now, researchers at Tel Aviv University have used a state-of-the-art technology called a triboelectric nanogenerator (TENG) to engineer, and test on animal models, a tiny sensor that restores tactile sensation via an electric current delivered directly to a healthy nerve, without requiring a complex implantation process or charging.

The researchers developed a sensor that can be implanted on a damaged nerve under the tip of the finger; the sensor connects to another nerve that functions properly and restores some of the tactile sensation to the finger. This unique device does not require an external power source such as mains electricity or batteries. The researchers explain that the sensor works on frictional force: whenever the device experiences friction, it charges itself.

The device consists of two tiny plates, each less than half a centimeter by half a centimeter in size. When these plates come into contact with each other, they release an electric charge that is transmitted to the undamaged nerve. When the injured finger touches something, the touch generates a voltage corresponding to the pressure applied to the device: a weak voltage for a weak touch and a strong voltage for a strong touch, just as in a normal sense of touch.
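The graded response described above can be sketched numerically under stated assumptions. For an idealized contact-separation TENG, the textbook open-circuit voltage is V_oc = σx/ε0, where σ is the triboelectric surface charge density and x is the gap between the plates. The article gives none of the device's parameters, so the function `touch_voltage`, the saturating contact-area curve, and every default value below are hypothetical, chosen only to show how a firmer touch can yield a larger voltage. This is not the Tel Aviv team's model.

```python
import math

EPSILON_0 = 8.854e-12  # vacuum permittivity, F/m


def open_circuit_voltage(sigma_c_per_m2: float, gap_m: float) -> float:
    """Idealized contact-separation TENG: V_oc = sigma * x / epsilon_0."""
    return sigma_c_per_m2 * gap_m / EPSILON_0


def touch_voltage(pressure_kpa: float,
                  sigma_max: float = 1e-5,        # hypothetical max charge density, C/m^2
                  p_sat_kpa: float = 10.0,        # hypothetical saturation pressure, kPa
                  gap_m: float = 1e-4) -> float:  # hypothetical plate gap, m
    """Hypothetical graded response: a firmer press brings more of the two
    surfaces into contact, so the effective charge density grows with
    pressure and levels off (the exponential curve is an assumption)."""
    if pressure_kpa <= 0:
        return 0.0
    contact_fraction = 1.0 - math.exp(-pressure_kpa / p_sat_kpa)
    return open_circuit_voltage(sigma_max * contact_fraction, gap_m)


# A light, a moderate and a firm touch (illustrative pressures only).
for p in (1.0, 5.0, 20.0):
    print(f"{p:5.1f} kPa -> {touch_voltage(p):7.1f} V")
```

In this toy model a weak touch yields a small voltage and a firm touch a larger one, matching the qualitative behaviour described in the article; real devices also depend on materials, surface structure and electrical load, none of which the article specifies.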

The researchers explain that the device can be implanted anywhere in the body where tactile sensation needs to be restored, and that it bypasses the damaged sensory organs. Moreover, the device is made from biocompatible material that is safe for use in the human body; it requires no maintenance, is simple to implant, and is not visible from the outside.

According to Dr. Maoz, after testing the new sensor in the lab (with more than half a million finger taps on the device), the researchers implanted it in the feet of the animal models. The animals walked normally, without any damage to their motor nerves, and the tests showed that the sensor allowed them to respond to sensory stimuli. “We tested our device on animal models, and the results were very encouraging,” concludes Dr. Maoz. “Next, we want to test the implant on larger models, and at a later stage implant our sensors in the fingers of people who have lost the ability to sense touch. Restoring this ability can significantly improve people’s functioning and quality of life, and more importantly, protect them from danger. People lacking tactile sensation cannot feel if their finger is being crushed, burned or frozen.”

Research

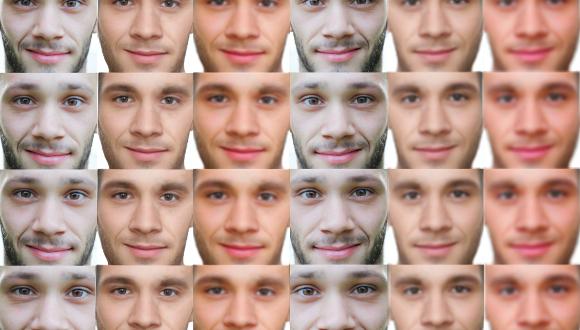

Deep Fake Technologies Create New Reality

Technology has proven time and again to have a beneficial effect on our world, whether by creating food sources and clean water or even by helping to fight the coronavirus. However, in the wrong hands, Deep Fake technology, like many other innovative tools, could pose a tremendous threat to the world.

Deep Fake is an artificial intelligence technology that can place one person's face onto another person in a video, making it easy to create convincing replicas of people. Not only does the result look real, it also makes fraud harder to detect, so it can easily be used for identity, financial or social fraud.

Prof. Irad Ben-Gal of the Department of Industrial Engineering, head of The Laboratory for AI, Machine Learning, Business & Data Analytics (LAMBDA), was recently interviewed by Walla News about the risks of Deep Fake technologies during elections. Prof. Ben-Gal explains that Deep Fake technologies can be applied legitimately and have a positive effect if used properly. Among other things, he discussed deep network models and today's options for creating accurate simulations of reality in applications such as smart cities and epidemiological models of infection.

The study

Conducted in collaboration with Dr. Erez Shmueli of the Department of Industrial Engineering, this study showed that the most effective way to disseminate information is through ordinary people in your social network who respond to and discuss new opinions you have just been exposed to. Unlike celebrities, ordinary users who retell and circulate new information create a local effect that makes the information more prominent on the web. This was the tactic Cambridge Analytica used to target Facebook users during Trump's first campaign.

This study followed up on research by Dr. Alon Sela, who holds a PhD from the Department of Industrial Engineering at Tel Aviv University, conducted in collaboration with Prof. Shlomo Havlin and Dr. Louis Shechtman. They examined models of how opinions spread in modern society and compared two spreading mechanisms: “Word-of-Mouth” (WOM) and online search engines (WEB). The researchers combined modelling with real experimental results, comparing opinions that people adopt through their friends with opinions they embrace when using a search engine based on PageRank and similar algorithms.
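Because the WEB mechanism rests on PageRank-style ranking, a minimal sketch of the textbook PageRank power iteration may help show why a few pages come to dominate. This is the standard algorithm with the conventional damping factor of 0.85, not the researchers' simulation; the five-page link graph in the usage lines is hypothetical.

```python
def pagerank(links: dict[str, list[str]], damping: float = 0.85,
             tol: float = 1e-12, max_iter: int = 1000) -> dict[str, float]:
    """Textbook PageRank by power iteration.

    `links` maps each page to the pages it links to. Pages with no outgoing
    links ("dangling" pages) spread their score evenly over all pages.
    """
    nodes = sorted(set(links) | {t for targets in links.values() for t in targets})
    n = len(nodes)
    rank = {v: 1.0 / n for v in nodes}
    for _ in range(max_iter):
        dangling = sum(rank[v] for v in nodes if not links.get(v))
        # Random-jump term plus the evenly redistributed dangling mass.
        new = {v: (1.0 - damping) / n + damping * dangling / n for v in nodes}
        # Each page passes its damped score in equal shares along its links.
        for src, targets in links.items():
            if targets:
                share = damping * rank[src] / len(targets)
                for dst in targets:
                    new[dst] += share
        converged = sum(abs(new[v] - rank[v]) for v in nodes) < tol
        rank = new
        if converged:
            break
    return rank


# Hypothetical web: most pages link to "a".
web = {"a": ["b"], "b": ["a"], "c": ["a"], "d": ["a"], "e": ["a", "b"]}
ranks = pagerank(web)
print(max(ranks, key=ranks.get))
```

Page "a", which most other pages link to, ends up with the highest score, while pages with no incoming links keep only the baseline (1 - d)/n. Ordering search results by such scores tends to steer users toward pages that are already popular.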

The findings showed that opinions adopted through the WEB scheme are more homogeneous. People using search engines follow just a few dominant views instead of encountering a variety of different opinions, a phenomenon known as “the rich get richer”. In contrast, the WOM scheme produces a more diverse distribution of opinions.
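The article does not say how the study measured homogeneity. One common, simple choice, assumed here and not necessarily the researchers' metric, is the normalized Shannon entropy of the opinion distribution: 0 when everyone holds one view and 1 when all views are equally common. The counts in the usage lines are made-up illustrations, not data from the study.

```python
import math
from collections.abc import Sequence


def normalized_entropy(counts: Sequence[int]) -> float:
    """Shannon entropy of an opinion distribution, scaled to [0, 1].

    0.0 means everyone holds the same opinion (fully homogeneous);
    1.0 means every opinion is equally common (maximally diverse).
    """
    total = sum(counts)
    if total == 0 or len(counts) < 2:
        return 0.0
    probs = [c / total for c in counts if c > 0]
    h = sum(p * math.log(1.0 / p) for p in probs)
    # Divide by the maximum possible entropy, log(number of opinions).
    return h / math.log(len(counts))


# Hypothetical counts of agents holding each of three opinions.
web_like = [80, 15, 5]   # a few dominant views
wom_like = [40, 35, 25]  # a more even spread
print(round(normalized_entropy(web_like), 2), round(normalized_entropy(wom_like), 2))
```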

Combining the physical world with the digital world

The “Digital Living 2030” project, spearheaded by Prof. Ben-Gal and Prof. Bambos at Stanford University, explores the connection between the digital and physical worlds by analyzing trends in how we will live 10 to 15 years from now. Prof. Ben-Gal explains that an avatar (a digital character) can be created to represent a person in the digital space for positive purposes. “Imagine an expert radiologist whose digital character has learned from all his past medical imaging data, so that others can work with his digital character when he himself is not present, for example, when he sleeps at night.”

The research helps clarify the risks of Deep Fake technology. Since the technology is now available for commercial use, it can be “used and abused” by anyone with access to it. Fake videos can be produced in large numbers by ordinary people who are connected through social networks and can influence each other's opinions.

In the last days of an election campaign, this kind of activity can dramatically change the results, and “even if the fraud is detected at the end of the day, it will be too late,” says Prof. Ben-Gal. “On the other hand, a diversity of opinions, including new and unconventional ones, is essential and enriching. If the technology is applied legitimately and reliably, it can enrich the body of human knowledge with diverse opinions.”

Loving the problem is the greatest way to invent

Research

Tel Aviv University researchers connect a real locust ear to a robot

A technological and biological development that is unprecedented in Israel and the world has been achieved at Tel Aviv University. For the first time, the ear of a dead locust has been connected to a robot that receives the ear’s electrical signals and responds accordingly. The result is extraordinary: When the researchers clap once, the locust's ear hears the sound and the robot moves forward; when the researchers clap twice, the robot moves backwards.

The interdisciplinary study was led by Idan Fishel, a master's student under the joint supervision of Dr. Ben M. Maoz of the Iby and Aladar Fleischman Faculty of Engineering and the Sagol School of Neuroscience, and of Prof. Yossi Yovel and Prof. Amir Ayali, experts from the School of Zoology and the Sagol School of Neuroscience, together with –, Dr. Anton Sheinin, Idan, Yoni Amit, and Neta Shavil. The results of the study were published in the prestigious journal Sensors.

The researchers explain that at the beginning of the study, they sought to examine how the advantages of biological systems could be integrated into technological systems, and how the senses of a dead locust could be used as sensors for a robot. “We chose the sense of hearing because it can be easily compared to existing technologies, in contrast to the sense of smell, for example, where the challenge is much greater,” says Dr. Maoz. “Our task was to replace the robot's electronic microphone with a dead insect's ear, use the ear’s ability to detect signals from the environment, in this case vibrations in the air, and, using a special chip, convert the insect's input into input for the robot.”

To carry out this unique and unconventional task, the interdisciplinary team (Maoz, Yovel and Ayali) faced a number of challenges. In the first stage, the researchers built a robot capable of responding to signals it receives from the environment. Then, in a multidisciplinary collaboration, the researchers were able to isolate and characterize the dead locust's ear and keep it alive, that is, functional, long enough to successfully connect it to the robot. In the final stage, the researchers found a way to pick up the signals received by the locust’s ear in a form the robot could use. At the end of the process, the robot was able to “hear” the sounds and respond accordingly.
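The robot's behaviour described earlier (one clap moves it forward, two claps move it backward) can be illustrated with a minimal sketch of the control step, assuming the chip delivers the amplified ear signal as a stream of samples. The functions, threshold, refractory period and grouping window are all hypothetical, and this is not the code Prof. Yovel's laboratory wrote; a real recording from a locust ear would also need filtering and calibration.

```python
import math
from collections.abc import Sequence


def detect_claps(signal: Sequence[float], sample_rate_hz: float,
                 threshold: float, refractory_s: float = 0.1) -> list[float]:
    """Return onset times (in seconds) of sounds in an amplified ear signal.

    An onset is a sample whose magnitude rises above `threshold` after being
    below it; rises within `refractory_s` of the previous onset are treated
    as part of the same clap.
    """
    onsets: list[float] = []
    last_onset = -math.inf
    was_above = False
    for i, sample in enumerate(signal):
        t = i / sample_rate_hz
        is_above = abs(sample) >= threshold
        if is_above and not was_above and t - last_onset >= refractory_s:
            onsets.append(t)
            last_onset = t
        was_above = is_above
    return onsets


def command_from_claps(onsets: Sequence[float], window_s: float = 0.5) -> str:
    """Map the claps in the first burst to a motion: 1 -> forward, 2 -> backward."""
    if not onsets:
        return "stay"
    burst = [t for t in onsets if t - onsets[0] <= window_s]
    return {1: "forward", 2: "backward"}.get(len(burst), "stay")


# Synthetic 1-second signal at 1 kHz: two short bursts, 0.3 s apart.
sig = [0.0] * 1000
sig[100], sig[105], sig[400] = 1.0, -1.0, 1.0
print(command_from_claps(detect_claps(sig, 1000.0, threshold=0.5)))
```

The refractory period keeps the oscillating response to a single clap from being counted several times, and the grouping window decides which claps belong to one command.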

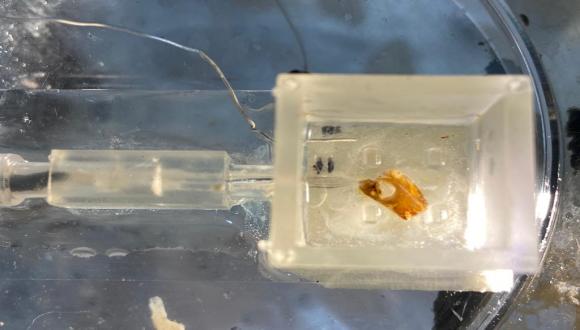

“Prof. Ayali’s laboratory has extensive experience working with locusts, and they have developed the skills to isolate and characterize the ear,” explains Dr. Maoz. “Prof. Yovel's laboratory built the robot and developed code that enables the robot to respond to electrical auditory signals. And my laboratory has developed a special device, Ear-on-a-Chip, that keeps the ear alive throughout the experiment by supplying oxygen and food to the organ, while allowing the electrical signals to be extracted from the locust’s ear, amplified and transmitted to the robot.

“In general, biological systems have a huge advantage over technological systems, both in terms of sensitivity and in terms of energy consumption. This initiative by Tel Aviv University researchers opens the door to sensory integration between robots and insects, and may make much more cumbersome and expensive developments in the field of robotics redundant.

“It should be understood that biological systems expend negligible energy compared to electronic systems. They are miniature, and therefore also extremely economical and efficient. For the sake of comparison, a laptop consumes about 100 watts, while the human brain runs on about 20 watts. Nature is much more advanced than we are, so we should use it. The principle we have demonstrated can be applied to other senses, such as smell, sight and touch. For example, some animals have amazing abilities to detect explosives or drugs; creating a robot with a biological nose could help us preserve human life and identify criminals in a way that is not possible today. Some animals know how to detect diseases. Others can sense earthquakes. The sky is the limit.”
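Because a watt is already a rate (one joule per second), the laptop and brain figures in the quote compare power draws, not amounts per hour or per day. Converting them to energy over one day, and assuming for illustration that the laptop runs continuously at that draw, gives:

```latex
E = P\,t, \qquad
E_{\text{laptop}} = 100\ \text{W} \times 24\ \text{h} = 2400\ \text{Wh} = 2.4\ \text{kWh}, \qquad
E_{\text{brain}} = 20\ \text{W} \times 24\ \text{h} = 480\ \text{Wh} = 0.48\ \text{kWh},
\qquad \frac{E_{\text{laptop}}}{E_{\text{brain}}} = 5.
```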

Research

Research by Dr. Ines Zucker of the Faculty of Engineering and The Porter School of Environmental Sciences shows that gaseous ozone can effectively inactivate SARS-CoV-2, the virus that causes Covid-19.

The Covid-19 pandemic has severely affected public health around the world, leading to global panic. For more than a year, countries worldwide have been enforcing social distancing and isolation, cancelling flights, and asking millions of their inhabitants to get tested to prevent further spread of the virus.

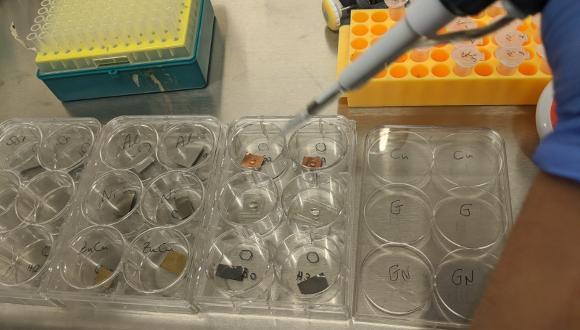

Evidence that Covid-19 is transmitted through aerosols and surfaces has led scientists all over the world to search for effective disinfection methods. Dr. Ines Zucker from the School of Mechanical Engineering and the Porter School of Environmental Science, together with Dr. Yinon Yecheskel, the manager of the Zucker Lab, is taking part in the global fight against the pandemic. Dr. Zucker and Dr. Yecheskel are currently working on practical methods to enable the use of ozone gas as a safe and potent disinfectant against the SARS-CoV-2 virus.

Ozone is best known for forming a protective layer in Earth's stratosphere that absorbs dangerous ultraviolet wavelengths and protects us from harmful radiation. At ground level, ozone is a toxic gas that can cause health problems and is generally considered an air pollutant. However, ozone is also a strong oxidant used in water and wastewater treatment. Dr. Ines Zucker and her team are adapting the method they use to break down water contaminants with ozone and applying it to disinfect coronavirus-contaminated surfaces and aerosols.

“We generate ozone through electrical discharge of oxygen gas, and typically use the mixed stream to oxidize chemicals in water. Now we have demonstrated the potential of ozone-gas disinfection to combat the COVID-19 outbreak,” says Dr. Zucker. Through process engineering, ozone can be used safely for air disinfection while minimizing exposure to ozone residues in the treated air. The advantage of ozone over other common disinfectants (such as alcohol) is its ability to disinfect hidden objects and indoor air, not just exposed surfaces.

Moreover, the researchers found a safe, non-infectious model of the SARS-CoV-2 virus, which they are using to accelerate their research on ozone disinfection.

“We have paved the way toward a promising ozone-based disinfection method, and we are now continuing our research and examining the optimal conditions for minimizing both infectivity and ozone residues in the treated area,” concludes Dr. Zucker.
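Balancing infectivity against ozone residues is essentially a concentration-versus-time trade-off. The article does not describe the team's kinetic model, so the sketch below substitutes the classic Chick-Watson disinfection model, ln(N/N0) = -k·C^n·t, with a hypothetical rate constant k; no real value for SARS-CoV-2 is implied, and the function names are illustrative.

```python
import math


def log10_reduction(k: float, conc_ppm: float, minutes: float, n: float = 1.0) -> float:
    """Chick-Watson model ln(N/N0) = -k * C**n * t, reported as log10(N0/N).

    k is a hypothetical rate constant in ppm^-n min^-1.
    """
    return k * conc_ppm ** n * minutes / math.log(10)


def minutes_for_target(k: float, conc_ppm: float, target_log10: float,
                       n: float = 1.0) -> float:
    """Exposure time needed to reach a target log10 reduction at a fixed concentration."""
    return target_log10 * math.log(10) / (k * conc_ppm ** n)


k = 0.5  # hypothetical rate constant, ppm^-1 min^-1
for c in (1.0, 2.0, 4.0):
    print(f"{c} ppm -> {minutes_for_target(k, c, 3.0):.1f} min for a 3-log reduction")
```

With n = 1, doubling the ozone concentration halves the required exposure time, so the best operating point depends on how much residual ozone the treated space can tolerate.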

Photo, from right: Dr. Joel Alter, Dr. Moshe Dessau, Dr. Yinon Yehezkel and Dr. Ines Zucker

The research was conducted in collaboration with Dr. Moshe Dessau from the Faculty of Medicine at Bar Ilan University, and Dr. Yaal Lester from Azrieli College of Engineering in Jerusalem. The preliminary findings of the study were published in the journal Environmental Chemistry Letters.
